Author: admin

What is MERN?

The MERN stack (MongoDB, Express, React and Node.js) is a set of frameworks and tools used for developing a software product. They are chosen specifically to work together in building well-functioning software (see the MERN app code at the bottom of the post).

A Full Stack Todo App

In this post we present a full-stack Todo app built with React.js, Next.js and Sequelize with SQLite3. Its layout is responsive and adapts to different screen sizes (see the code at the bottom of the post).

AirTable scrape challenge

The problem is that the data are loaded highly dynamically: the HTML contains only the records that you currently see on the browser screen.

If there are a lot of records, collecting such a table is difficult. One possible approach is to calculate the size of the screen and of the table rows, then use browser automation to write a script that scrolls through the table bit by bit and collects the data.

Is there any other feasible way to get the table data? For example, the dynamic data might be fetched directly with HTTP requests.
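The scroll-and-collect idea can be sketched in Python without tying it to a particular browser driver. This is a minimal sketch under assumptions: `collect_virtualized_rows`, `FakeTable`, the row ids and the pixel sizes are all hypothetical names and values made up for illustration, and `FakeTable` merely simulates a page that renders only the rows inside the viewport. In a real script the two callbacks would wrap browser automation calls that read the rendered rows and scroll the table container.

```python
from typing import Callable, Dict, List, Tuple

Row = Tuple[str, str]  # (row id, row text); a hypothetical row shape


def collect_virtualized_rows(
    read_visible: Callable[[], List[Row]],
    scroll_by: Callable[[int], bool],
    step_px: int,
    max_steps: int = 10_000,
) -> List[Row]:
    """Scroll a virtualized table step by step and merge the rows seen on the way.

    read_visible returns the rows currently rendered on the page;
    scroll_by(step_px) scrolls down and returns False once the bottom is reached.
    """
    seen: Dict[str, str] = {}
    for _ in range(max_steps):
        for row_id, text in read_visible():
            seen.setdefault(row_id, text)  # overlapping rows are stored only once
        if not scroll_by(step_px):
            break
    return list(seen.items())


class FakeTable:
    """Stand-in for a browser page: only rows inside the viewport are 'rendered'."""

    def __init__(self, total_rows: int, row_height: int, viewport_height: int) -> None:
        self.total_rows = total_rows
        self.row_height = row_height
        self.viewport_height = viewport_height
        self.offset = 0  # current scroll position in pixels

    def read_visible(self) -> List[Row]:
        first = self.offset // self.row_height
        bottom = self.offset + self.viewport_height
        last = min(self.total_rows, -(-bottom // self.row_height))  # ceiling division
        return [(f"row-{i}", f"record {i}") for i in range(first, last)]

    def scroll_by(self, step_px: int) -> bool:
        max_offset = max(0, self.total_rows * self.row_height - self.viewport_height)
        if self.offset >= max_offset:
            return False  # already at the bottom
        self.offset = min(max_offset, self.offset + step_px)
        return True


if __name__ == "__main__":
    table = FakeTable(total_rows=100, row_height=32, viewport_height=320)
    # Step by slightly less than one screen so consecutive views overlap by a row.
    rows = collect_virtualized_rows(table.read_visible, table.scroll_by, step_px=320 - 32)
    print(len(rows))
```

Stepping by less than the viewport height is the important design choice here: each view overlaps the previous one, so no row is skipped, and the dictionary keyed by row id removes the duplicates.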

JS infinite scroll does not work for AirTable either.

Please comment below if you have any tips or hints.

Companies like Facebook owner Meta, e-commerce behemoth Amazon and ride-hailing firm Lyft have announced job cuts in recent weeks as the US tech industry deals with an uncertain economic climate.

DW, 01.12.2022

Data Set

So, we’ve got lists (as a data directory) of those laid-off tech professionals (from open sources). If you are interested in the data, we would gladly share it for a fee (starting from $5 per entry). Filtering by role, expertise and location is available.

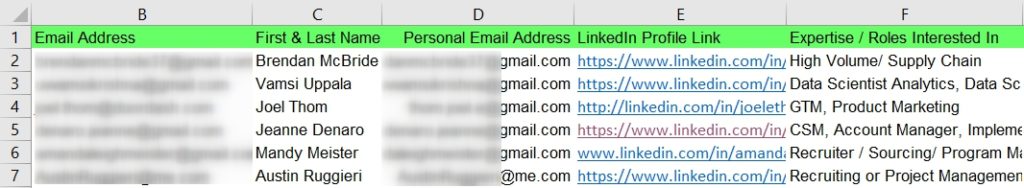

Sample

Take a look at a data sample (no emails) below.

Please contact me at igor [dot] savinkin [at] gmail [dot] com for a customized data set.

We’ve already shared some Tips and Tricks for scraping business directories or data aggregator sites. Recently, however, someone asked us to scrape aggregators in the context of Google Sheets and/or MS Excel.

We’ve got some code provided by Akash D. that works on ticketmaster.co.uk. He automates the browser (Chrome as well as Edge) using Selenium with Python. Rotating authenticated proxies are used to stay undetected. Yet the site is protected by Distil Networks.

Recently we encountered a website that worked as usual, yet when we composed and ran a scraping script/agent against it, it put up blocking measures.

In this post we’ll take a look at how the scraping process went and the measures we took to overcome that.

In this post I want to share how one may scrape business directory and real estate data using the Scrapy framework.
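Proxy rotation itself can be illustrated with a few lines of Python. This is not the code provided by Akash D., only a minimal sketch under assumptions: `proxy_rotation` is a hypothetical helper, and the hosts and credentials are placeholders. It only builds authenticated proxy URLs in round-robin order; how each URL is handed to the browser depends on the driver setup and is not shown.

```python
from itertools import cycle
from typing import Iterator, List
from urllib.parse import quote


def proxy_rotation(hosts: List[str], username: str, password: str) -> Iterator[str]:
    """Return an endless round-robin iterator of authenticated proxy URLs."""
    if not hosts:
        raise ValueError("at least one proxy host is required")
    # Percent-encode the credentials so characters such as '@' or ':' stay valid in a URL.
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return (f"http://{user}:{secret}@{host}" for host in cycle(hosts))


if __name__ == "__main__":
    # Placeholder hosts and credentials, for illustration only.
    rotation = proxy_rotation(["10.0.0.1:8080", "10.0.0.2:8080"], "user", "p@ss")
    for _ in range(3):
        print(next(rotation))
```

Each new browser session would take the next URL from the iterator, so consecutive sessions leave through different proxies.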

PHP Curl POSTing JSON example

<?php
// Request an API token from the Octoparse Open API by POSTing JSON credentials.
$base_url = "https://openapi.octoparse.com";
$token_url = $base_url . '/token';

$post = [
    'username'   => 'igorsavinkin',
    'password'   => '<xxxxxx>',
    'grant_type' => 'password'
];
$payload = json_encode($post);

$headers = [
    'Content-Type: application/json',
    'Content-Length: ' . strlen($payload)
];
$timeout = 30; // connection timeout in seconds

$ch_upload = curl_init();
curl_setopt($ch_upload, CURLOPT_URL, $token_url);
curl_setopt($ch_upload, CURLOPT_HTTPHEADER, $headers);
curl_setopt($ch_upload, CURLOPT_POST, true);
// Send the JSON string as the request body (not http_build_query($post)).
curl_setopt($ch_upload, CURLOPT_POSTFIELDS, $payload);
// Return the response as a string instead of printing it directly.
curl_setopt($ch_upload, CURLOPT_RETURNTRANSFER, true);
curl_setopt($ch_upload, CURLOPT_CONNECTTIMEOUT, $timeout);

$response = curl_exec($ch_upload);
if ($response === false) {
    // Report the transport error and stop; there is nothing to save.
    echo 'Curl Error: ' . curl_error($ch_upload);
    curl_close($ch_upload);
    exit(1);
}
curl_close($ch_upload);

//echo 'Response length: ', strlen($response);
echo $response;

// Save the token response for later API calls.
file_put_contents('octoparse-api-token.json', $response);