The stock AWSCodeStarFullAccess policy is meant to provide full access to AWS CodeStar via the AWS Management Console, yet it still does not grant enough permissions for an IAM user to create a code connection in CodePipeline. So we had to create a custom policy based on the AWS tutorial suggestions.
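As a rough illustration of what such a custom policy might look like, here is a minimal sketch that builds the policy document in Python. The action list is an assumption: the `codestar-connections:*` actions are real IAM actions, but the exact set used in the custom policy is not given here, and the `AllowCodeConnections` statement ID and the wildcard resource are placeholders to adapt.

```python
import json

# Assumption: connection-related IAM actions that a custom policy could allow.
# The exact list from the original custom policy is not reproduced in the text.
CONNECTION_ACTIONS = [
    "codestar-connections:CreateConnection",
    "codestar-connections:GetConnection",
    "codestar-connections:ListConnections",
    "codestar-connections:UseConnection",
]


def build_connection_policy(resource="*"):
    """Return an IAM policy document (as a dict) allowing connection actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCodeConnections",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": CONNECTION_ACTIONS,
                "Resource": resource,  # narrow this to specific ARNs where possible
            }
        ],
    }


# Serialize to JSON, ready to paste into the IAM console's policy editor.
policy_json = json.dumps(build_connection_policy(), indent=2)

if __name__ == "__main__":
    print(policy_json)
```

The resulting JSON can be attached to the IAM user or group alongside AWSCodeStarFullAccess.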

Recently we got a tricky website with dynamic content to scrape. The data are loaded through XHRs into each part of the DOM (HTML markup). So the task was to develop an effective scraper that works asynchronously while using reasonable CPU resources.
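One common way to keep an async scraper from overloading the CPU (and the target site) is to cap the number of requests in flight. The sketch below shows that idea with `asyncio.Semaphore`; it is not the scraper from the post. The names `scrape_all` and `fake_fetch` and the `example.com` URLs are made up for illustration, and `fake_fetch` stands in for a real HTTP client call.

```python
import asyncio


async def scrape_all(urls, fetch, max_concurrency=5):
    """Fetch all URLs concurrently, with at most `max_concurrency` in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)
    results = {}

    async def worker(url):
        # The semaphore bounds how many fetches run at the same time.
        async with semaphore:
            results[url] = await fetch(url)

    await asyncio.gather(*(worker(url) for url in urls))
    return results


async def fake_fetch(url):
    """Hypothetical stand-in for a real XHR/HTTP request."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"<html>{url}</html>"


def demo():
    urls = [f"https://example.com/part/{i}" for i in range(10)]
    return asyncio.run(scrape_all(urls, fake_fetch, max_concurrency=3))


if __name__ == "__main__":
    pages = demo()
    print(len(pages), "pages fetched")
```

Passing the fetch function in as a parameter keeps the concurrency logic independent of whichever HTTP client is actually used.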

| Service | Residential | Cost/month | Traffic/month | $ per GB | Rotating | IP whitelisting | Performance and more | Notes |
|---|---|---|---|---|---|---|---|---|
| MarsProxies | | N/A | N/A | 3.5 | yes | yes | 500K+ IPs, 190+ locations; test results | SOCKS5 supported. Proxy grey zone restrictions are applied. |
| Oxylabs.io | | N/A | 25 GB | 9 - 12; "pay-as-you-go": 15 | yes | yes | 100M+ IPs, 192 countries; 30K requests; 1.3 GB of data; 5K pages crawled | Scraping restricted targets, incl. LinkedIn, is not allowed. |
| 2captcha.com | yes | N/A | N/A | 3 - 6 | yes | no | 90M+ IPs, 220+ countries | |
| Smartproxy | | Link to the price page | N/A | 5.2 - 7; "pay-as-you-go": 8.5 | yes | yes | 65M+ IPs, 195+ countries | Free trial. Scraping some grey zone targets, incl. LinkedIn, is not allowed. |
| Infatica.io | | N/A | N/A | 3 - 6.5; "pay-as-you-go": 8 | yes | yes | Over 95% success. Bans from Cloudflare are also few, less than 5%. | Blacklist of sites: proxies do not work with those. |
| Mango Proxy | | N/A | 1 - 50 GB | 3 - 8; "pay-as-you-go": 8 | yes | yes | 90M+ IPs, 240+ countries | |
| IPRoyal | | N/A | N/A | $4.55 | yes | yes | 32M+ IPs, 195 countries | Scraping some grey zone targets, incl. Facebook, is not allowed. List of blocked sites. |
| Geonode.com | | $50 | 100 GB | 0.5; "pay-as-you-go": 5 | yes | yes | 3,000 requests done with 5 threads in half an hour; 95% successful requests | Easy to configure; lowest price in terms of subscription; simple and powerful, no hidden tricks; good technical support |
| Rainproxy.io | yes | $4 | from 1 GB | 4 | yes | | | |
| BrightData | yes | 15 | | | | | | |
| ScrapeOps Proxy Aggregator | yes | API credits per month | N/A | N/A | yes | Allows multithreading; the service provides browsers on its servers. It allows running N [cloud] browsers from a local machine. The number of threads depends on the subscription: min 5 threads. | The all-in-one proxy API that allows using 20+ proxy providers from a single API | |
| Lunaproxy.com | yes | from $15 | x GB per 90 days | 0.85 - 5 | Each plan allows a certain traffic amount within a 90-day limit. | | | |
| LiveProxies.io | yes | from $45 | 4 - 50 GB | 5 - 12 | yes | yes | Private IP allocation; tailored large plans for enterprises; 10M IPs, 50 countries | E.g. 200 IPs with 4 GB for $70.00, with a 30-day limit. |
| Charity Engine (docs) | yes | - | - | starting from 3.6. Additionally, CPU computing: from $0.01 per avg CPU core-hour, from $0.10 per GPU-hour (source). | failed to connect so far | | | |
| proxy-sale.com | yes | from $17 | N/A | 3 - 6; "pay-as-you-go": 7 | yes | yes | 10M+ IPs, 210+ countries | 30-day limit for a single proxy batch |
| Tabproxy.com | yes | from $15 | N/A | 0.8 - 3 (lowest price is for a chunk of 1000 GB) | yes | yes | 200M+ IPs, 195 countries | 30-180 day limit for a single proxy batch (e.g. 5 GB) |
| proxy-seller.com | yes | N/A | N/A | 4.5 - 6; "pay-as-you-go": 7 | yes | yes | 15M+ IPs, 220 countries | Generation of up to 1000 proxy ports in each proxy list; HTTP / SOCKS5 support; an unlimited number of proxies can be generated by assigning unique parameters to each list |
| SwiftProxy.com | yes | from $15 | from 5 GB | 1.8 - 3; 0.70 on the Enterprise plan | yes | yes | 70M+ ethically sourced IPs, 220+ countries | 500 MB of residential traffic for free on sign-up. |
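To compare plans that quote a monthly cost and a traffic allowance rather than a per-GB rate, the effective price can be worked out by simple division. The helper below is a small sketch; `price_per_gb` is a made-up name, and the inputs are the Geonode plan ($50 for 100 GB) and the LiveProxies example ($70 for 200 IPs with 4 GB) from the table.

```python
def price_per_gb(plan_cost_usd, traffic_gb):
    """Effective price in USD per GB for a plan with a fixed traffic allowance."""
    if traffic_gb <= 0:
        raise ValueError("traffic_gb must be positive")
    return plan_cost_usd / traffic_gb


# Geonode: $50 per month for 100 GB.
geonode = price_per_gb(50, 100)  # 0.5

# LiveProxies example: $70 buys 200 IPs with 4 GB, so this figure
# also covers the IP allocation, not just the traffic.
liveproxies_example = price_per_gb(70, 4)  # 17.5

if __name__ == "__main__":
    print(f"Geonode: ${geonode:.2f}/GB")
    print(f"LiveProxies example: ${liveproxies_example:.2f}/GB")
```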

Additional

Another proxy comparison in here.

Undetectable’s proxy partners.

Bot protected websites

What is MERN?

The MERN stack (MongoDB, Express, React and Node.js) is a set of frameworks and tools used for developing a software product. They are specifically chosen to work together to create well-functioning software (see the MERN app code at the bottom of the post).

A Full Stack Todo App

In this post we present a full-stack Todo app built with React.js, Next.js and Sequelize with SQLite3. Its layout is responsive and adapts to different screen sizes (see the code at the bottom of the post).

AirTable scrape challenge

The problem is that data are loaded highly dynamically. HTML contains only the information that you currently see on the browser screen.

If there are a lot of records, it is difficult to collect such a table. One possible way is to calculate the size of the screen and of the rows in the table, and then use browser automation to write a script that scrolls through the table bit by bit and collects the data.
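The scroll-and-collect idea can be sketched independently of any particular browser automation tool. In the sketch below, `read_visible` and `scroll_by` are hypothetical callbacks that would wrap the tool's own calls in a real script; `FakeTable` only simulates a virtualized table so the loop can be run as is. Rows are de-duplicated by ID because consecutive viewports may overlap.

```python
def collect_virtualized_rows(read_visible, scroll_by, step, max_steps=10_000):
    """Collect rows from a table that renders only what is on screen.

    read_visible() -> list of (row_id, row_data) currently rendered.
    scroll_by(pixels) -> True if the view moved, False at the bottom.
    """
    seen = {}
    for _ in range(max_steps):
        for row_id, data in read_visible():
            seen.setdefault(row_id, data)  # skip rows already collected
        if not scroll_by(step):
            break  # bottom reached; the last view was already read
    return seen


class FakeTable:
    """Hypothetical stand-in for a virtualized table in a browser."""

    def __init__(self, total_rows, row_height, viewport_height):
        self.rows = [(i, {"name": f"record {i}"}) for i in range(total_rows)]
        self.row_height = row_height
        self.viewport_height = viewport_height
        self.offset = 0
        self.max_offset = max(0, total_rows * row_height - viewport_height)

    def read_visible(self):
        first = self.offset // self.row_height
        last = (self.offset + self.viewport_height - 1) // self.row_height
        return self.rows[first:last + 1]

    def scroll_by(self, pixels):
        new_offset = min(self.offset + pixels, self.max_offset)
        moved = new_offset != self.offset
        self.offset = new_offset
        return moved


def demo():
    table = FakeTable(total_rows=100, row_height=32, viewport_height=320)
    # A step no larger than the viewport height ensures no row is skipped.
    return collect_virtualized_rows(table.read_visible, table.scroll_by, step=320)


if __name__ == "__main__":
    print(len(demo()), "rows collected")
```

In a real script, the step should stay at or below the measured viewport height, and a short wait after each scroll may be needed so newly loaded rows have time to render.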

Is there any other feasible way to get the data of a table? For example, one could code the HTTP requests directly to get the dynamic data.

JS infinite scroll does not work for AirTable either.

Please comment below if you have any tips or hints.

Companies like Facebook owner Meta, e-commerce behemoth Amazon and ride-hailing firm Lyft have announced job cuts in recent weeks as the US tech industry deals with an uncertain economic climate.

DW, 01.12.2022

Data Set

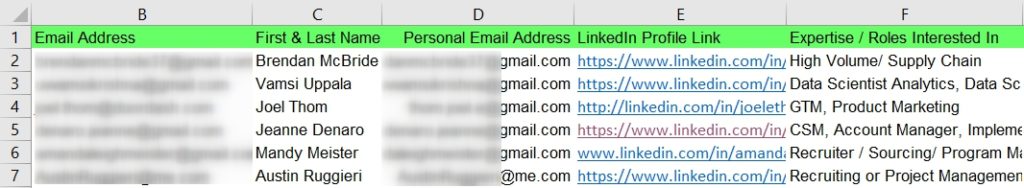

So, we’ve got lists (as a data directory) of those laid-off tech professionals (from open sources). If someone is interested in the data, we would gladly share it for a fee (starting from $5 per entry). Filtering by role, expertise and location is available.

Sample

Take a look at a data sample (no emails) below.

Please contact me at: igor [dot] savinkin [at] gmail [dot] com for a customized data set.

We’ve already shared some tips and tricks for scraping business directories and data aggregator sites. Yet recently someone asked us to scrape aggregators in the context of Google Sheets and/or MS Excel.

Recently we scraped the Yelp business directory to acquire high-quality B2B leads (company + CEO info). This required us to apply many techniques, such as proxying, scraping external company sites, email verification and more.